Tailoring solutions to meet ethical implications within EU projects

The difference between pushing forward to find solutions and policing to identify consequences

IN-PREP (https://www.in-prep.eu/, 2017-2020) is a large Horizon 2020 innovation action in the domain of crisis management that focuses on the preparedness phase. The programme seeks to build a training and planning system that prepares emergency practitioners to collaborate effectively across agencies and disciplines in responding to natural and man-made disasters. The consortium brings together 20 partners from 7 countries, with civil protection agencies making up more than a third of it.

As designers of a new interoperability architecture for cross-border disaster management, we in IN-PREP have specific ethical and social responsibilities as stewards for those we aim to serve with the final product.

Folding ethical implications into IN-PREP is much more than just asking “how do we make sure the users behave ethically with the system and the data?” It is about seeing the bigger picture: considering both individuals and collectives as they act in the world, so that we can best understand the role and impact of the technology in improving society.

Ethics only have meaning in relation to:

- Who is acting

- How they understand the world

- What is going on around them

- What is going on that is making them act

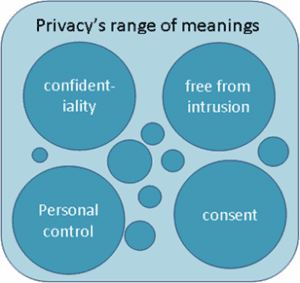

The Privacy example

It is possible to design disaster information technology that asks, “Will the privacy of this data be maintained?”, but the answer cannot be reduced to a simple “yes” or “no”. Privacy can mean confidentiality, freedom from intrusion, personal control, or consent. Two people who both answer “yes” may be making fundamentally different decisions, which can lead one to see the other as failing to meet their privacy needs.

The Demographics case

When organisations plan how to distribute aid, they often use demographics to inform their decisions. However, demographic categories are often political: they carry power asymmetries with them and cannot simply be transposed from one place to another.

As a result, the categories might not only prove irrelevant to the needs of those being served, but could also lead to new forms of judgment, social sorting, or barriers to aid for groups that classify themselves differently. This happened in the U.S. during the 2010 census. Citizens of Mexican heritage, who accounted for more than half of U.S. population growth between 2000 and 2010, could not find a racial category on the survey that fit their understanding of themselves. Instead, they had to classify themselves as “white” or as “some other race”, a category each individual had to define for themselves. Because they did not fit the classification schema, this demographic group's presence, and thus its socio-political influence, was diminished. When such differences in understanding are built into data categories, even unintentionally, they can discourage joint responsibility, justice, and democratic participation.

Our aim of innovating in ways that improve society is therefore grounded in ethical considerations, from the norms that structure expert decision-making to the need to work across cultures and disciplines so as not to perpetuate social power struggles, injustices, or culture clashes.

The context of action example

Even commonly accepted safety features, though designed to protect society, can create unintended risks if they are not considered in their larger context. Speed bumps, for example, force drivers to slow down, improving safety and reducing loss of life on the road and in its surroundings. However, while generally a “good”, they leave no room for exceptions, such as an ambulance heading to an emergency for which the road with speed bumps is the shortest route: it must either slow down over the bumps or take a longer alternative route. Either way, the speed bumps make it arrive later at a scene of medical need, where time matters.

The ethics of a situation are shaped not only by who is answering, but also by the characteristics of the hazard faced, the scale of emergency, the context of action, and the practices of those responding.

To understand the socio-cultural forces that influence the design process, we need to consider how, when, by whom, and to what end a technology or related practice is used. We must also be aware of how the public understands responders' practices and solutions, and of the extent to which these make the public vulnerable.

Dr Katrina Petersen is a senior research analyst with Trilateral Research Ltd (http://trilateralresearch.co.uk/). Within the IN-PREP project, Trilateral manages the Social, Ethics & Legal Panel as well as the Security Scrutiny Board. Their role is to build a responsible research ethic from the start and to mitigate risks that might arise throughout the research process. Trilateral is also developing the ethical, privacy and legal impact assessment over the course of the project, ensuring that the project establishes a strong framework for responsible design and use.